
Top 8 Digital Analytics Industry Trends 2019

At the end of every year, our industry, like every other, makes predictions about the year ahead. These predictions usually cover the top challenges organizations will face, the solutions they will allocate budget to, the technologies they will invest in to build out their technology stack, and the new methodologies they will deploy to improve their business.

As 2019 forges ahead, the digital analytics industry continues to grow and change. Through our work with clients, and immersion in the industry, we’ve taken a look at the analytics industry trends that are currently top-of-mind for many.

- Trend #1: Deeper Digital Transformation (DX)

- Trend #2: Importance of Data Curation (Self-Service Analytics)

- Trend #3: Custom Data Integrations (ETL/Data Warehouse)

- Trend #4: Rise of the Customer Data Platform (CDP)

- Trend #5: Focus on Data Ownership (Clickstream Solutions)

- Trend #6: Continued Data Privacy Attention

- Trend #7: Migration to Server-Side Tagging

- Trend #8: Leveraging Server-Side Testing / Optimization

Awareness of these trends can open up new possibilities, address your most pressing challenges, and help EVOLVE your organization.

Trend #1: Deeper Digital Transformation (DX)

Digital Transformation has increasingly made its way to the forefront of many boardrooms, and for good reason. While many organizations have made tremendous progress in their Digital Transformation, there are still massive gaps in execution, integration, and benefit to end users.

Gartner market insights from December 2018 showed that “67% of business leaders say their company will no longer be competitive if it can’t be significantly more digital by 2020.”

You have likely been part of conversations about Digital Transformation, but it is discussed differently in different circles and is farther reaching than most realize. Simply put, Digital Transformation is a shift in mindset about how technology and processes are used to improve business performance and, ultimately, increase value for your customers.

Now, let’s unpack this.

The Driving Forces Behind DX

Exceeding Your Customers’ Expectations

Customers have the power of information and choices at their fingertips. They expect better, and personalized, experiences from brands they interact with. They know that if you can’t give them what they want, another brand likely will. Therefore, the more you can deliver the right message, to the right person, at the right time, the more you’ll be able to meet, and exceed, your customers’ expectations.


Technology is the driving force behind your ability, or inability, to deliver the right experiences to your customers. By embracing the proper tools to improve your customer experience, the results you can expect are:

- Greater customer satisfaction

- Increased customer acquisition

- Higher revenue and profit

- Stronger customer trust

- Consistent evolution

Increasing Your Competitive Advantage

While making your customers happy will ultimately improve your bottom line, you also need to stay ahead of your competition. A crucial element is empowering your team with the optimal MarTech stack. You may use your existing tech stack to help shift to a new business model, or optimize and augment your existing operations. By focusing on staying ahead of your competition, you can work to create a culture of continuous evolution.

Making DX A Reality

Unfortunately, there’s no playbook or checklist to follow to achieve Digital Transformation. However, here are a few examples of initiatives some organizations have taken:

- Mobile ordering

- Voice integrations (Alexa)

- Inventory logistics based on predictive forecasting

- Collecting and leveraging the “right” data (as we often see the wrong data being used)


Tip: Don’t implement any of the above just for the sake of checking a box or running after that shiny new digital technology. You need to assess your customers to understand their demand for it and then have a commitment to continuously support and optimize these solutions.

There are so many ways in which an organization can fully and deeply embrace Digital Transformation; however, keep in mind that it requires cross-departmental collaboration. Digital innovators rewrite the rules of business (think Amazon), and without deep Digital Transformation, your business will not be able to meet your customers’ expectations, stay competitive, and excel.

Trend #2: Importance of Data Curation (Self-Service Analytics)


In 2017, Intel CEO Brian Krzanich famously said, “data is the new oil.” The parallel was drawn because in the 1900s oil changed entire industries and, as a result, the world. Today, data is doing the same.


Only through the collection, analysis, and research of data can organizations do more of what works and less of what doesn’t. This is no longer limited to huge corporations with large budgets and ample resources. That began to shift years ago with the introduction of free analytics platforms (Google Analytics Standard), open source advanced analytics platforms (Snowplow Analytics), and free or low-cost data visualization tools such as Google Data Studio. Tools like these have given smaller companies the ability to dig into their data and identify opportunities or niches that disrupt entire industries, taking huge chunks out of larger companies before they saw it coming (think Square vs. PayPal).

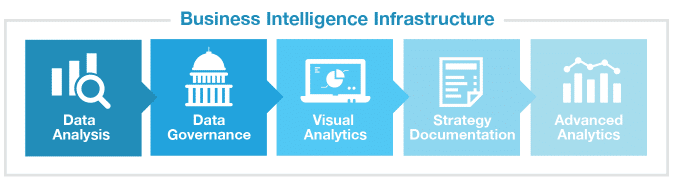

The volume, velocity, and variety (the three V’s of data) have been rapidly increasing. Self-Service Analytics is how your organization can wrangle your data sources and make them accessible and trustworthy to more people in your organization.

What is Self-Service Analytics?

The truth is, to keep up, organizations need to be more agile. Decision makers can’t afford to wait for the IT team to gather data, process reports, and have analysts report on findings. A self-service analytics approach gives business users direct access to company data and allows them to make data-driven decisions in real-time. It also means that you can get up and running, and realize ROI, relatively quickly.

Other benefits of self-service analytics include:

- Empowering the people within your organization to answer their own questions, share insights, and make accurate data-driven decisions.

- Increasing the accessibility of quality, useful data throughout the organization. If you have business users asking your analysts how many visitors came to the site last month, you are doing it wrong.

- Increasing the accuracy of data-driven decisions and the value business users get from data (data literacy).

- Freeing up analysts to spend time on more impactful analysis.

If you want to become a data-driven organization and are looking to increase data curation, here are a few tools and technologies you should explore:

- Event Analytics Platforms: Mixpanel, Snowplow Analytics

- Traditional Digital Analytics Platforms: Adobe Analytics, Google Analytics 360

- Data Warehouses: AWS Redshift, Snowflake, Google BigQuery

- Data Visualization & Dashboards: Domo, Looker, Tableau, Power BI, Adobe Analysis VRS/Workspace (only works with Adobe Analytics data)

However, the question often becomes: how do we implement and use self-service analytics while also ensuring security and data integrity? Many organizations don’t know the answer, and because of that there has been some backlash against self-service analytics recently. As with many buzzwords, you need to take a pragmatic approach to Self-Service Analytics and recognize that technology alone is not a magic bullet. It takes considerable effort and investment in people and processes to make it a reality.

The good news is that if you can ensure the data is correct, and that someone can easily interpret the output, self-service analytics will undoubtedly improve your insights and help grow your business.


Trend #3: Custom Data Integrations (ETL/Data Warehouse)

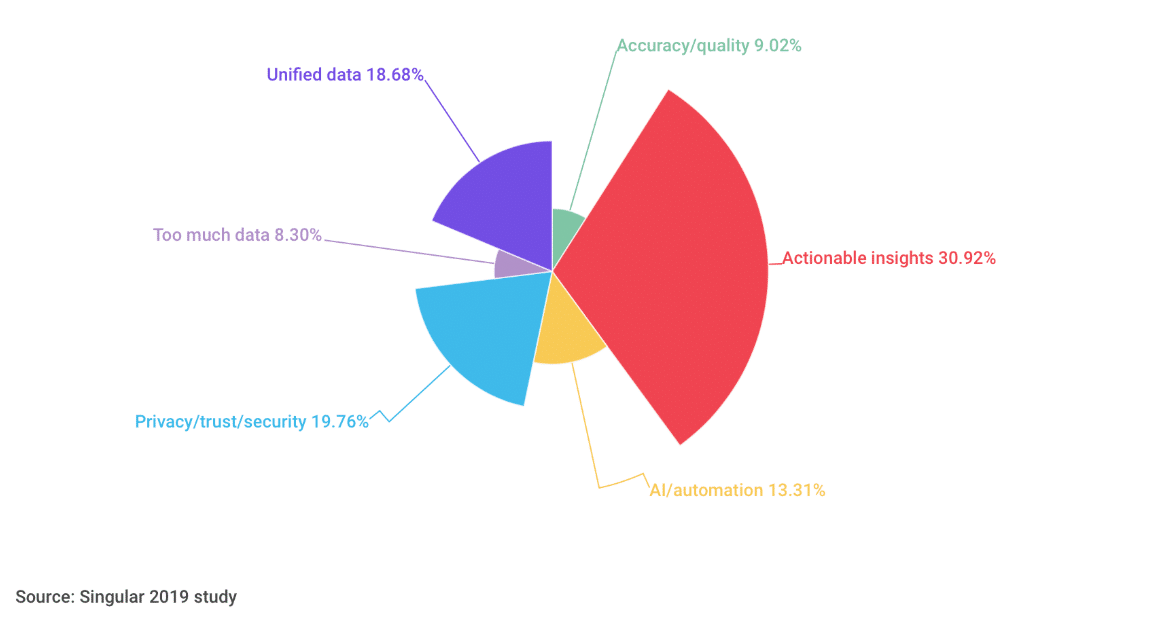

This year, a top priority for CMOs is figuring out how to gain actionable insights from their vast amounts of data. As SurveyMonkey CMO Leela Srinivasan points out, “With the exponential growth of data over the past decade and into the new year, it’s becoming harder daily to turn information into action.”

One way to glean valuable insights from your mountains of data is through rigorous data management, including custom data integrations such as data warehousing. Without it, your organization is likely using bad data and missing opportunities to gain a complete view of your customer, evaluate company performance and trends, measure operational efficiency, benchmark against competitors, and uncover other valuable insights.

How Do Custom Data Integrations Work?

More and more, we see organizations asking the right questions but lacking access to the data they need to answer them. Sometimes a question requires a specific data set, but the system it lives in won’t let analysts access it the way they need. Or the answer requires analysis of multiple data sets housed in siloed databases that aren’t integrated.

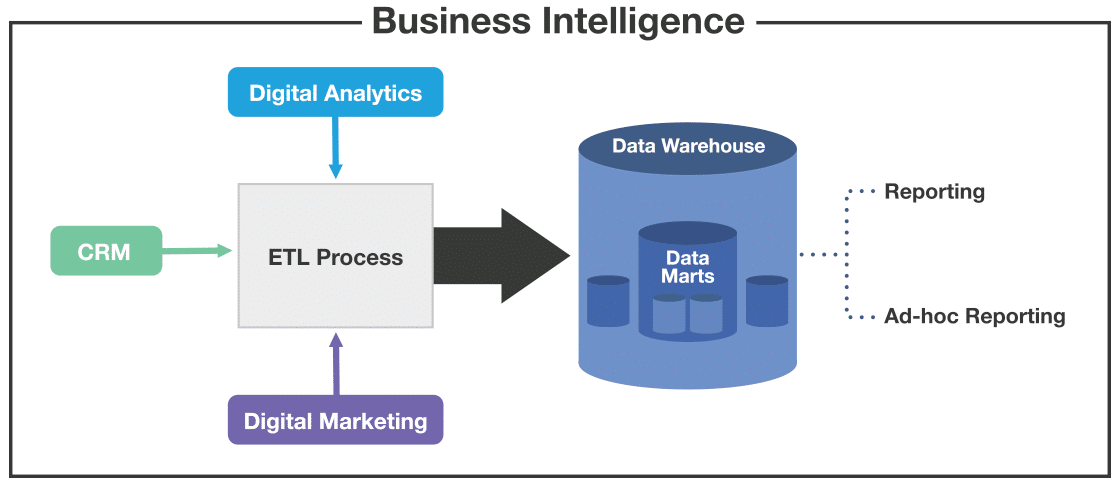

If your data is structured this way, it’s losing its value. This is why data warehouses exist!

A data warehouse acts as a central repository where an enterprise stores its data from multiple sources. Data is moved from individual databases into the data warehouse by a process called ETL (Extract, Transform, Load), or its variant ELT (Extract, Load, Transform), which is becoming the recommended standard. In ETL, you extract the data from its existing database, transform it to fit a predetermined schema based on your reporting needs, and then load it into your data warehouse; in ELT, the raw data is loaded first and transformed inside the warehouse. Usually, data flows from a transactional database into a data warehouse on a daily, weekly, or monthly basis. This process can be set up to run automatically, ensuring you have current, consistent data.
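To make the ETL steps concrete, here is a minimal Python sketch. It assumes two hypothetical siloed sources (a CRM export and an order system) and uses an in-memory SQLite database as a stand-in for a cloud warehouse such as Redshift, Snowflake, or BigQuery. The record formats, table names, and columns are invented for illustration.

```python
import sqlite3
from datetime import datetime

# Hypothetical extracts from two siloed systems that use different formats.
crm_rows = [
    {"CustomerID": "C-001", "Email": "ANA@EXAMPLE.COM", "SignupDate": "03/15/2019"},
    {"CustomerID": "C-002", "Email": "ben@example.com", "SignupDate": "04/02/2019"},
]
order_rows = [
    ("C-001", "2019-04-01", 120.50),
    ("C-001", "2019-05-12", 35.00),
    ("C-002", "2019-05-20", 80.25),
]

def extract():
    """Extract: pull raw records from each source (stubbed with in-memory data)."""
    return crm_rows, order_rows

def transform(crm, orders):
    """Transform: normalize both sources into one predetermined schema."""
    customers = [
        (
            r["CustomerID"],
            r["Email"].strip().lower(),  # consistent casing for emails
            datetime.strptime(r["SignupDate"], "%m/%d/%Y").date().isoformat(),  # ISO dates
        )
        for r in crm
    ]
    facts = [(cid, day, round(amount, 2)) for cid, day, amount in orders]
    return customers, facts

def load(conn, customers, facts):
    """Load: write the conformed rows into warehouse tables."""
    conn.execute("CREATE TABLE IF NOT EXISTS dim_customer "
                 "(customer_id TEXT PRIMARY KEY, email TEXT, signup_date TEXT)")
    conn.execute("CREATE TABLE IF NOT EXISTS fact_order "
                 "(customer_id TEXT, order_date TEXT, revenue REAL)")
    conn.executemany("INSERT OR REPLACE INTO dim_customer VALUES (?, ?, ?)", customers)
    conn.executemany("INSERT INTO fact_order VALUES (?, ?, ?)", facts)
    conn.commit()

warehouse = sqlite3.connect(":memory:")
load(warehouse, *transform(*extract()))

# Once both sources live in one place, a cross-source question is a single join.
report = warehouse.execute(
    """SELECT c.email, COUNT(*) AS orders, SUM(o.revenue) AS revenue
       FROM dim_customer c JOIN fact_order o USING (customer_id)
       GROUP BY c.email ORDER BY c.email"""
).fetchall()
print(report)
```

In an ELT variant, the raw rows would be loaded into staging tables first, and the normalization done in `transform` would instead run as SQL inside the warehouse.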

According to a 2019 Business Application Research Center (BARC) data preparation survey, the top 3 drivers for data preparation in North America are:

- Higher expectations of a tangible business impact and increased competitiveness through analytics

- Growing number of data sources with increasing volume, velocity and variety of data

- Higher expectations in terms of performance, agility and flexibility in business departments

The Benefits of a Data Warehouse

Enhanced Business Intelligence

By joining data from multiple sources, executives will be able to answer their business questions, and will no longer be making crucial decisions on limited or incomplete information. Branding decisions like product positioning, pricing, and distribution as well as strategic decisions such as goals, priorities, and company direction can be made with confidence using consistent data.

Increased Data Quality & Consistency

Moving data from siloed databases into a data warehouse involves transforming your data from its existing format into a common format. This means that all of your data will be properly processed and formatted, creating data consistency. If all business units are utilizing the same data warehouse for reporting, insights and results will align across the entire organization.

High Return on Investment (ROI)

A study by the International Data Corporation found that data warehouse implementations have “generated a median five-year return on investment of 112% with a mean payback of 1.6 years.” With all of the options available to create a data warehouse, it’s possible these days to keep costs low, reduce the resources required to manage and maintain it, and allow for rapid implementation with tremendous flexibility.

Retains Historical Data

A data warehouse can also store large amounts of historical data. Unlike a transactional database, it gives you the ability to isolate specific time periods or identify trends that you otherwise wouldn’t be able to.

Increased Ability to Mine Your Data & Perform Advanced Analytics

By building and utilizing a data warehouse you can use any tool you desire to access, analyze, and report on your data. You can connect directly to the warehouse from most data visualization tools, which means your analysts will be spending less time preparing the data, and more time doing actual analysis to get you the insights you need.

Trend #4: Rise of the Customer Data Platform (CDP)


This Forbes article highlights that 73% of all people point to customer experience as an important factor in their purchasing decisions, just behind price and product quality. Yet only 49% of U.S. consumers say companies provide a good customer experience today.

It also points out that almost one in three consumers (32%) will leave the brand they love after just one bad customer experience. Prior to the digital age, your customers may have overlooked a bad experience, but today’s consumers expect personalization, and today’s marketers better be focused on meeting those expectations. So, how can you make this a reality?

What Is A Customer Data Platform (CDP)?

In the past you may have collected multiple layers of information about a single customer, but you would have been unable to connect them all without a central point of control. This led to many data sources within organizations being used ineffectively, or not at all.

A Customer Data Platform collects data from multiple online and offline interactions and matches them to a single customer profile. One of the best features of CDPs is their ability to profile interactions from anonymous customers and retroactively tie that data to a customer once they are identified (reverse stitching). A CDP’s ability to stitch customers across devices increases the likelihood that you’ll deliver a compelling message.
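Reverse stitching can be illustrated with a deliberately simplified Python sketch. It assumes a hypothetical event stream in which every event carries an anonymous cookie ID and identifying events (such as a login) also carry a customer ID. Real CDPs build far richer identity graphs with many identifiers and matching rules, so treat this as an illustration of the idea, not as how any particular product works.

```python
from collections import defaultdict

# Hypothetical event stream: every event has an anonymous cookie ID; some
# events (e.g. a login) also carry a known customer ID.
events = [
    {"anon_id": "cookie-A", "customer_id": None, "action": "view_product"},
    {"anon_id": "cookie-A", "customer_id": None, "action": "add_to_cart"},
    {"anon_id": "cookie-B", "customer_id": None, "action": "view_product"},
    {"anon_id": "cookie-A", "customer_id": "cust-42", "action": "login"},
    {"anon_id": "cookie-C", "customer_id": "cust-42", "action": "purchase"},  # second device
]

def build_profiles(events):
    """Group events into profiles, retroactively attaching anonymous history
    to a customer once any event links that anonymous ID to them."""
    # Pass 1: learn which anonymous IDs have been identified.
    identity_map = {}
    for e in events:
        if e["customer_id"]:
            identity_map[e["anon_id"]] = e["customer_id"]
    # Pass 2: assign every event, including earlier anonymous ones, to a profile.
    # Anonymous IDs that were never identified stay as their own profile.
    profiles = defaultdict(list)
    for e in events:
        key = e["customer_id"] or identity_map.get(e["anon_id"], e["anon_id"])
        profiles[key].append(e["action"])
    return dict(profiles)

print(build_profiles(events))
```

The two-pass design is what makes the stitching retroactive: the browsing on `cookie-A` happened before the login, yet it ends up on the `cust-42` profile alongside the purchase made from another device.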


Once those profiles are created, organizations target audiences via connected marketing technologies to deliver the incentives and experiences that best meet those customers’ needs and wants based on their prior activity. Common use cases include remarketing, email campaigns, SMS, and personalization.

Features of a CDP:

- Data Types: Personally Identifiable Information (PII), 1st, 2nd, 3rd party, anonymous, Data Management Platform data and interaction history

- Data Sources: Structured, unstructured, semi-structured

- Data Detail: CDPs capture clickstream data and are capable of storing historical information in large capacity

- Use Cases: Single view of customer (1:1)

- Measure: Continuous and real-time

- Activation: Direct customer engagement

- Identity: Anonymous and known

Examples of CDPs on the market today include:

- Lytics: removes complexity to help marketers serve smart, relevant experiences to individual customers.

- Tealium AudienceStream: combines identity resolution, data enrichment capabilities, and robust audience management.

- Adobe Audience Manager (AAM): technically a Data Management Platform, but crosses over into CDP territory.

You wouldn’t be able to buy someone the perfect gift after a first date, right? It takes time to get to know someone before you find yourself completing their sentences. In much the same way, companies can’t expect to truly understand their customers from a single touchpoint. They have to be able to collect data across all customer journey touchpoints and use that data to provide the right experiences.

Trend #5: Focus on Data Ownership (Clickstream Solutions)


Analytics platforms collect what is called “raw data.” Every time a visitor views a webpage or app screen, or takes an action that is being tracked, a “hit” is recorded. A hit is simply a row of raw data that contains rich information about the hit and the visitor. A typical hit can include information such as: date, time, user ID, browser, operating system, country, city, requested URL, hostname, etc. This collection of raw data is also called clickstream data.

Today’s most popular analytics platforms, such as Adobe Analytics and Google Analytics, then take that raw data and “process” it to pull out the rich, aggregate information for reporting purposes. So, what you’re seeing in your reporting interface is processed (i.e. grouped/aggregated) data.


You can glean some great insights from that processed data, but by not utilizing your clickstream data, you’re reducing your analysis opportunities and the value it can provide.
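The difference between processed and raw data can be sketched in a few lines of Python. The `Hit` fields below are a small, hypothetical subset of what a real hit contains. A per-URL count is the kind of aggregate a reporting interface shows, while the second question (which users reached `/signup` after visiting `/home`) needs the user-level rows.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Hit:
    """One row of raw clickstream data (a small, hypothetical subset of fields)."""
    timestamp: str  # ISO 8601, so string order is chronological
    user_id: str
    browser: str
    country: str
    url: str

raw_hits = [
    Hit("2019-03-01T10:00:00", "u1", "Chrome", "US", "/home"),
    Hit("2019-03-01T10:00:30", "u1", "Chrome", "US", "/pricing"),
    Hit("2019-03-01T11:15:00", "u2", "Safari", "CA", "/home"),
    Hit("2019-03-01T11:16:10", "u2", "Safari", "CA", "/signup"),
    Hit("2019-03-02T09:05:00", "u3", "Firefox", "US", "/home"),
]

# "Processed" view: the aggregate a reporting interface typically shows.
pageviews_by_url = Counter(hit.url for hit in raw_hits)

# Raw view: a user-level question the aggregate alone cannot answer.
def users_who_converted(hits, start="/home", goal="/signup"):
    """Return users who hit `start` and later hit `goal`."""
    seen_start, converted = set(), set()
    for hit in sorted(hits, key=lambda h: h.timestamp):
        if hit.url == start:
            seen_start.add(hit.user_id)
        elif hit.url == goal and hit.user_id in seen_start:
            converted.add(hit.user_id)
    return converted

print(pageviews_by_url.most_common(1))
print(users_who_converted(raw_hits))
```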

The Benefits of Clickstream Data Analysis

Today, fairly simple business questions are easily answered in most analytics tools, such as:

- Who’s visiting my website?

- What are the top sources of traffic to my site?

- Which devices do visitors use to access my site?

- Which marketing channel has the highest conversion rate?

- Which pages have the highest bounce rate?

- Where are most users bailing in my sales funnel?

However, as your organization matures, your ability to mine data should mature along with it. In order to evolve your analytics maturity, you’ll need highly granular, user-level data that allows you to answer more valuable business questions.

Some of the most popular applications of this data include:

- Data Integrations with Other Data Sources

- Customer Analytics

- Catalog Analytics

- Prediction Modeling

- Attribution Modeling

- Cohort Analysis

- Customer Lifetime Value Analysis

- Real Time Product Recommendations

- Pathing Analysis
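As one example from the list above, a basic pathing analysis on user-level clickstream rows can be sketched in Python: order each user’s hits by time, then count page-to-page transitions. The `(user_id, timestamp, url)` rows are hypothetical, and real pathing analysis usually splits hits into sessions first, which this sketch skips.

```python
from collections import Counter, defaultdict

# Hypothetical raw hits as (user_id, timestamp, url); ISO timestamps sort correctly.
hits = [
    ("u1", "2019-03-01T10:00", "/home"),
    ("u1", "2019-03-01T10:01", "/pricing"),
    ("u1", "2019-03-01T10:03", "/signup"),
    ("u2", "2019-03-01T11:00", "/home"),
    ("u2", "2019-03-01T11:02", "/blog"),
    ("u3", "2019-03-02T09:00", "/home"),
    ("u3", "2019-03-02T09:01", "/pricing"),
]

def page_transitions(hits):
    """Count how often visitors go from one page directly to the next."""
    paths = defaultdict(list)
    for user, ts, url in sorted(hits, key=lambda h: (h[0], h[1])):
        paths[user].append(url)
    transitions = Counter()
    for pages in paths.values():
        # Pair each page with the page that followed it for the same user.
        transitions.update(zip(pages, pages[1:]))
    return transitions

for (src, dst), n in page_transitions(hits).most_common(2):
    print(f"{src} -> {dst}: {n}")
```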

How Can I Collect and Analyze My Clickstream Data?

The best way to analyze your clickstream data would be to collect and store it in a data warehouse. This may or may not be possible based on your current analytics platform, and whether or not they provide access to the raw unprocessed data.

Here’s a quick breakdown for some of today’s most popular tools.

- Google Analytics Standard (free version): No direct access to the clickstream data.

- Google Analytics 360: You can enable the Google BigQuery integration, which will make the clickstream data available to you on the Google Cloud Platform (GCP).

- Adobe Analytics: If you use Adobe Analytics, you can have your clickstream data automatically delivered via FTP/S3 in multiple zipped files.

While the paid platforms of Google Analytics 360 and Adobe Analytics offer clickstream data, there are limitations such as the inability to directly collect PII (Personally Identifiable Information), the inability to reprocess data historically, and often the lack of real-time clickstream data.

To overcome the limitations of Google Analytics and Adobe Analytics, some organizations have moved towards owning the entire infrastructure. One tool we use to help our clients achieve this is Snowplow Analytics. Snowplow is an event analytics platform that runs on your own cloud infrastructure (that you directly own/manage). This platform allows you to collect your raw clickstream data in real-time and store it in a data warehouse. This data can then be analyzed using any tool or data visualization solution. Snowplow can be implemented in addition to your existing analytics platform, augmenting your ability to answer business questions.


Data is an extremely valuable asset, perhaps the most valuable asset for organizations these days. Its applications are endless, and if you can create an infrastructure to answer your most important business questions, you will create a clear competitive advantage.

Trend #6: Continued Data Privacy Attention


The General Data Protection Regulation (GDPR) is a European Union (EU) data privacy regulation that puts individuals in control of their personal data. Its purpose is to consolidate privacy regulations across the EU, and it includes administrative fines of up to €20 million or 4% of worldwide annual revenue, whichever is higher, for organizations that are not in compliance (even US companies with EU customers).
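For the most serious infringements, the GDPR fine ceiling is the higher of two figures: €20 million, or 4% of total worldwide annual turnover for the preceding financial year. The percentage therefore only becomes the binding number above €500 million in turnover. A tiny Python helper makes the arithmetic explicit; the function name is ours, and it computes a ceiling, not an expected fine.

```python
def gdpr_max_fine_eur(worldwide_annual_turnover_eur: float) -> float:
    """Ceiling for the most serious GDPR infringements: the higher of
    EUR 20 million or 4% of total worldwide annual turnover."""
    return max(20_000_000.0, worldwide_annual_turnover_eur * 4 / 100)

for turnover in (100_000_000, 500_000_000, 2_000_000_000):
    print(f"turnover EUR {turnover:,} -> max fine EUR {gdpr_max_fine_eur(turnover):,.0f}")
```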

GDPR went into effect on May 25, 2018. Even though it is an EU regulation, it affects businesses in the US and elsewhere that do business with people in the EU. So, yes, it will affect you even if your company is based solely in the US but has EU customers.

The full details of the GDPR are overwhelming, and the regulations and laws attached to it are still evolving. In most cases, they’re evolving faster than organizations can adapt, widening the chasm between what consumers want and what brands can deliver. A 2018 poll of 1,000 US adults by ExpressVPN found that consumers are skeptical about data privacy: 71% of respondents said they were concerned about how marketers collect and use their personal data.

GDPR Is The Real Deal

Many marketers weren’t taking GDPR as seriously as they should. This is somewhat understandable since other attempts at giving customers control, such as the Do Not Track (DNT) browser setting, had been largely ignored by marketers for years without any consequences.

However, less than a year into GDPR, we are already seeing very real enforcement penalties levied against major brands:

- Google was fined $57 million under GDPR (the new European data privacy law).

- Facebook could face a $1.63bn fine under GDPR over its latest data breach. Facebook was fined £500,000 under the Data Protection Act for the Cambridge Analytica scandal but may not get off so lightly this time.

While GDPR affects US-based companies that collect EU data, there has also been movement in the US to increase data privacy. Several US states are proposing their own data protection laws that provide certain GDPR-like consumer rights, the first of which passed in California in June 2018.

What Is The California Consumer Privacy Act (CCPA)?

The California Consumer Privacy Act (CCPA) is a response to the Cambridge Analytica scandal and is slated to become the most comprehensive data privacy law in the US. The Act goes into effect on January 1, 2020, with the main objective of preventing the sale of consumer information. It applies to businesses dealing with California residents that satisfy one or more of the following criteria:

- Gross annual revenue above $25m

- Annually buys, receives for the business’ commercial purposes, sells, or shares for commercial purposes, the personal information of 50,000 or more consumers, households, or devices

- Generates at least 50% of annual income from selling information of California residents
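Because the Act applies if any one of these thresholds is met, a quick self-check reduces to an OR over three conditions. The Python sketch below encodes the thresholds exactly as summarized above; the `BusinessProfile` fields are hypothetical, and the check is a simplification of the statutory text rather than a compliance tool.

```python
from dataclasses import dataclass

@dataclass
class BusinessProfile:
    # Hypothetical inputs; field names are illustrative only.
    gross_annual_revenue_usd: float
    consumers_households_devices_per_year: int  # personal info bought/received/sold/shared
    share_of_revenue_from_selling_pi: float     # 0.0 to 1.0

def ccpa_thresholds_met(b: BusinessProfile) -> list[str]:
    """Return which of the three thresholds (as summarized in this article) apply."""
    met = []
    if b.gross_annual_revenue_usd > 25_000_000:
        met.append("revenue above $25m")
    if b.consumers_households_devices_per_year >= 50_000:
        met.append("50,000+ consumers, households, or devices")
    if b.share_of_revenue_from_selling_pi >= 0.5:
        met.append("50%+ of revenue from selling personal information")
    return met

# A small retailer under the revenue threshold can still be covered:
# one threshold is enough.
shop = BusinessProfile(8_000_000, 120_000, 0.0)
print(ccpa_thresholds_met(shop))
```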

Like GDPR, the CCPA provides certain rights to consumers. Some highlights of the Act include:

- Consumers’ right to obtain records of their personal information

- Requires an opt-out link on the company’s website, allowing consumers to opt out of data sharing with third parties

- Consumers’ right to have their data deleted and to opt out of the sale of their information (affected companies will have a ‘Do Not Sell My Personal Information’ link in the footer)

- Mandatory opt-in for selling of personal data of minors

- Expands the definition of personal information to include not only traditional personally identifiable information but also such far-ranging categories as purchasing history or tendencies, records of products or services provided, biometric data, geolocation data, “audio, electronic, visual, thermal, olfactory, or similar information,” “psychometric information,” and any inferences drawn from such information

- Right to sue a company whose data breach exposes their personal information

- Permits a private right of action in the case of data breaches and allows for administrative penalties to be imposed by the California Attorney General of up to $7,500 per violation with no maximum cap

While the CCPA seems to offer strong protections for consumers, careful study shows that “walled gardens” like Google and Facebook are not impacted, since they aren’t selling consumer information (they directly own your data and use it accordingly, but they don’t directly sell it to other parties). In terms of the larger impact on the Digital Marketing industry, the CCPA has a greater effect on companies like LiveRamp and the broader DMP/ad-tech market.

Eleven other states have recently introduced similar legislation. These bills include their own versions of opt-out rights and differ slightly from the GDPR and CCPA. Understanding, preparing, and implementing a privacy framework that complies with multiple laws is complex, leading many to ask the US Congress to pass comprehensive national data privacy legislation.


While many are slow to address the new privacy legislation, it is a clear opportunity for your organization to build brand affinity. The sooner you are GDPR/CCPA compliant, the more likely you will be seen as a leader who cares about your customers.

Trend #7: Migration to Server-Side Tagging


Tag management systems (TMS) are critical applications in today’s digital ecosystem. A tag management system makes it simple for you to implement, manage, and maintain marketing tags on your websites within one interface. Examples of these include:

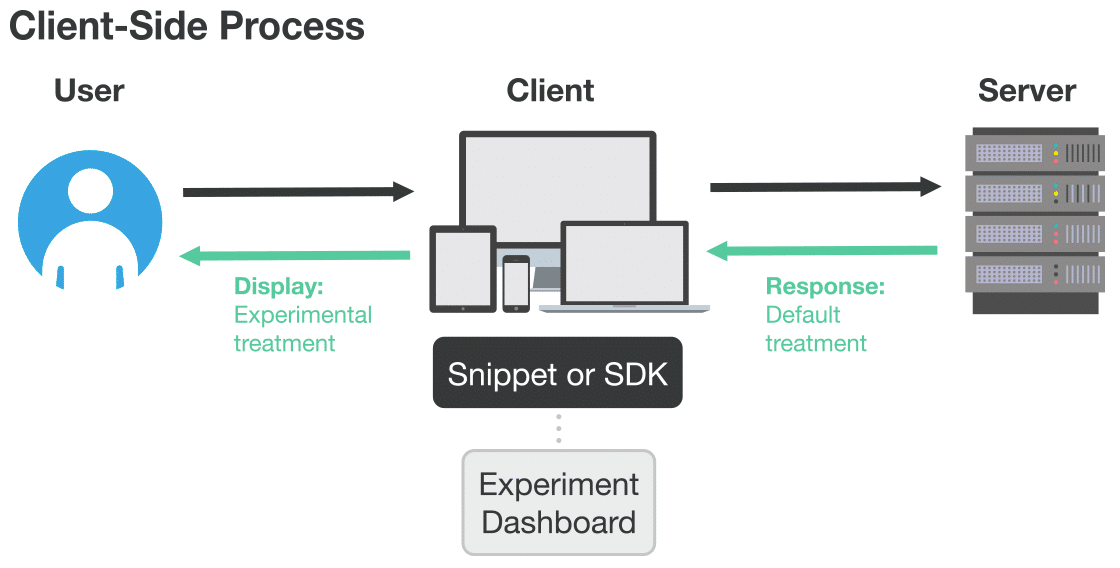

The traditional approach with a Tag Management System is to operate it through a client-side approach. In this configuration, a single JavaScript file is continuously updated to load and execute other client-side JavaScript directly onto your website.

A different configuration to consider (that is increasing in popularity), is a server-side Tag Management System. A server-side TMS typically still has a single client-side JavaScript library, but instead of loading and executing additional code directly on the client-side, data requests are routed to the TMS and then sent out to the analytics and marketing technologies via a server-side process. In short, network requests are handled by the TMS instead of by the visitor’s browser.
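The routing idea can be sketched in Python: the browser sends a single request to the TMS endpoint, and server-side code decides which vendors receive the event and in what format. The connector functions, payload fields, and routing table are all hypothetical and do not reflect the API of any specific server-side TMS or vendor; a real implementation would make outbound HTTP calls instead of returning dictionaries.

```python
import json
from typing import Callable, Dict, List

# Hypothetical vendor connectors. A real server-side TMS would send each
# payload to the vendor's collection API; here they only build the payloads.
def to_analytics(event: dict) -> dict:
    return {"event_name": event["name"], "client_id": event["visitor_id"], "page": event["url"]}

def to_ad_platform(event: dict) -> dict:
    return {"type": event["name"].upper(), "user": event["visitor_id"]}

def to_own_warehouse(event: dict) -> dict:
    return dict(event)  # keep the full raw event for your own clickstream store

# Which connectors receive which event types (illustrative routing rules).
ROUTES: Dict[str, List[Callable[[dict], dict]]] = {
    "page_view": [to_analytics, to_own_warehouse],
    "purchase": [to_analytics, to_ad_platform, to_own_warehouse],
}

def handle_collect_request(body: str) -> Dict[str, dict]:
    """Receive the one request sent by the browser and fan it out server-side."""
    event = json.loads(body)
    return {fn.__name__: fn(event) for fn in ROUTES.get(event["name"], [])}

# The browser sends one request; the server decides where the data goes.
outbound = handle_collect_request(
    json.dumps({"name": "purchase", "visitor_id": "v-123", "url": "/checkout", "value": 59.99})
)
print(sorted(outbound))
```

Adding a new vendor in this model means adding a connector and a routing entry on the server, with no new JavaScript or SDK shipped to the visitor.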

The Benefits of Server-Side Tagging

Certain tag implementations are only available client-side, but server-side tag management implementations offer numerous advantages:

- Increased Security/Privacy: With less code and cookies involved, you limit the chance of someone’s privacy being compromised. You also don’t have to load any 3rd party libraries so you can avoid the headache that companies like Ticketmaster and British Airways had to endure when their 3rd party scripts resulted in data breaches for a large number of their customers.

- Data Integrity: You can use your existing implementation for any supported vendor, which means you’re using a consistent, reusable data value format.

- Data Ownership: You can send your data not only to multiple vendors at once but also to your own database, collecting the valuable clickstream data we talked about earlier.

- Improved Performance: Instead of your TMS loading a plethora of tags on every single page, you only have to load one tag, significantly reducing page load time.

- Reliability: The reduced amount of data management allows you to focus on the transmission of data, and server-side tagging isn’t affected by things such as connection interruptions or ad blockers.

- Prevent Mobile App SDK Bloat: Your app developer doesn’t want to add SDKs every time you decide to work with a new analytics or marketing technology. When you have a server-side solution, you can easily route the right data to the right place without adding a new SDK that will decrease performance.

A server-side TMS approach delivers a highly-scalable solution that allows marketers to add new vendors without needing to worry about performance and client-side security concerns.

Server Side Tag Management Solutions & Limitations

There are several products that provide a server-side solution. Many of our clients leverage either Tealium iQ + EventStream or Segment.

Not all tags support a server-side deployment. This is due to how they collect and process data today (in a client-side dependent way). With ad-tech vendors, this is often due to the direct reliance on cookies (primarily 3rd party cookies). With this said, more and more analytics and marketing tags are beginning to support this method of deployment, including: Criteo, Google Ads, Facebook, Salesforce DMP, Sizmek, Google Analytics, Mixpanel, and Adobe Analytics.

When you decide to navigate towards a server-side tagging solution, it is vital to properly understand and plan the deployment. Data governance also comes into play here, as you’ll need to have clear policies on who can add a tag and whether client-side tags are even allowed on the website.

Trend #8: Leveraging Server-Side Testing / Optimization


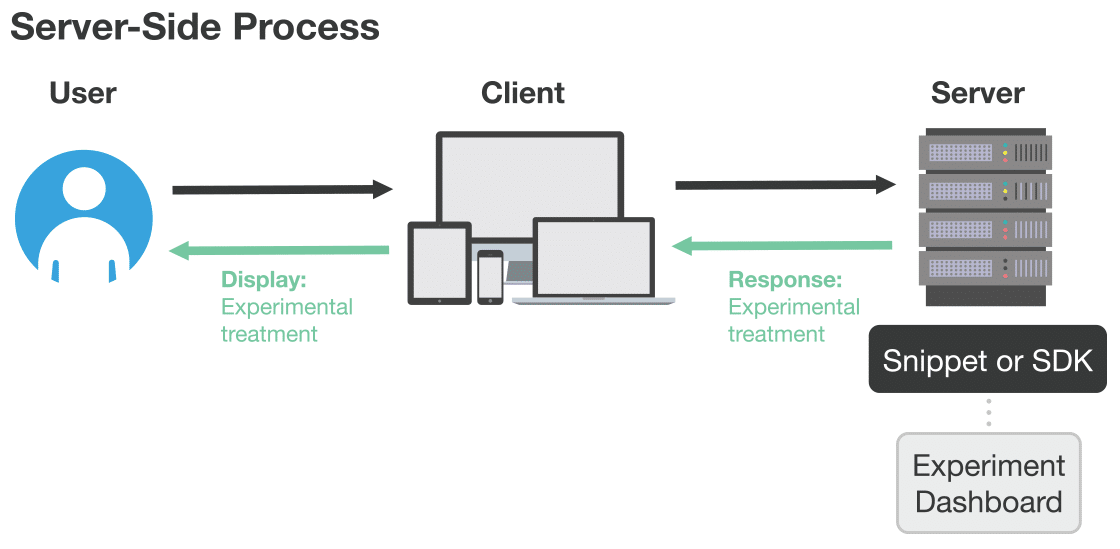

Website optimization efforts are typically carried out via client-side methods. Platforms such as Optimizely Web, Google Optimize, and Adobe Target are most commonly implemented this way. This involves adding JavaScript to your website, which makes a round trip to the vendor’s servers and then executes code to alter the page.

Most optimization tools also support server-side implementations. With this method, your web server or mobile application interfaces directly (via server-side requests) with the optimization tool instead of relying on the user’s browser to make the request (via client-side requests). A related model routes your site through a vendor’s proxy server, which alters the code sent to the browser.
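A core piece of any server-side test is deciding, on the server, which variant a visitor gets before the page is rendered. A common approach is to hash a stable user ID together with an experiment key, so assignment is deterministic without storing any state. The Python sketch below shows that technique; the experiment key, prices, and markup are hypothetical, and this is not the bucketing algorithm of Adobe Target, Optimizely Full Stack, or any other tool.

```python
import hashlib

def assign_variant(user_id: str, experiment: str,
                   variants=("control", "treatment"), weights=(50, 50)) -> str:
    """Deterministically bucket a user on the server, so the same user always
    gets the same variant and the page is rendered once, without flicker."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 100  # pseudo-uniform bucket in 0..99
    cumulative = 0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if bucket < cumulative:
            return variant
    return variants[-1]

def render_price(user_id: str) -> str:
    """Render the price server-side; the browser never sees the other variant."""
    variant = assign_variant(user_id, "pricing-test-2019")  # hypothetical experiment key
    price = 49 if variant == "treatment" else 59
    return f"<span class='price'>${price}</span>"

print(render_price("visitor-1001"))
```

Including the experiment key in the hash keeps assignments independent across experiments, so a user in the treatment group of one test is not systematically in the treatment group of the next.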

The Pros & Cons of Server-Side Optimization

There are a number of reasons to consider a server-side optimization solution. The primary ones are control over performance and the ability to test things that are difficult (but not necessarily impossible) otherwise, such as selective pricing changes or other dynamic content. The reasons not to use server-side optimization are also compelling: it often slows your team down, since you’ll have to work directly with IT/web developers to execute any tests. They already have enough on their plate, and your work may not get prioritized.

Ultimately, there’s a solid place for both client-side and server-side optimization methods, even within the same organization. For more complex tests against dynamic content, consider the server-side approach. For less complex tests where the UX is being altered, consider the traditional client-side approach. Many tools, such as Adobe Target, Google Optimize, and Optimizely Web + Optimizely Full Stack can help you achieve your optimization objectives.

| Pros | Cons |
|---|---|
| Performance (completely flicker-free) | Requires dependence on developers to set up a test |
| Flexibility to test dynamic content (pricing changes, for example) | Slower to deploy tests and often to stop a test |
| Ability to test non-UI changes (performance optimizations; brand new site launch) | |
| Security: limits external JavaScript code on your website (test in highly secure/sensitive areas) | |

Explore These Trends, Plan for the Future

Being aware of current analytics trends is vital to remain relevant and create a competitive advantage in an industry that is constantly changing. As we continue to march forward into 2019, look to see what industry trends continue to emerge and which ones become stagnant.

We’ll be publishing future posts that dive deeper into a few of these digital analytics industry trends, so look out for those. Are there other trends or technologies you are evaluating that you’d like to learn more about, or others that you think will increase in popularity? Drop me a comment!